The power of an image

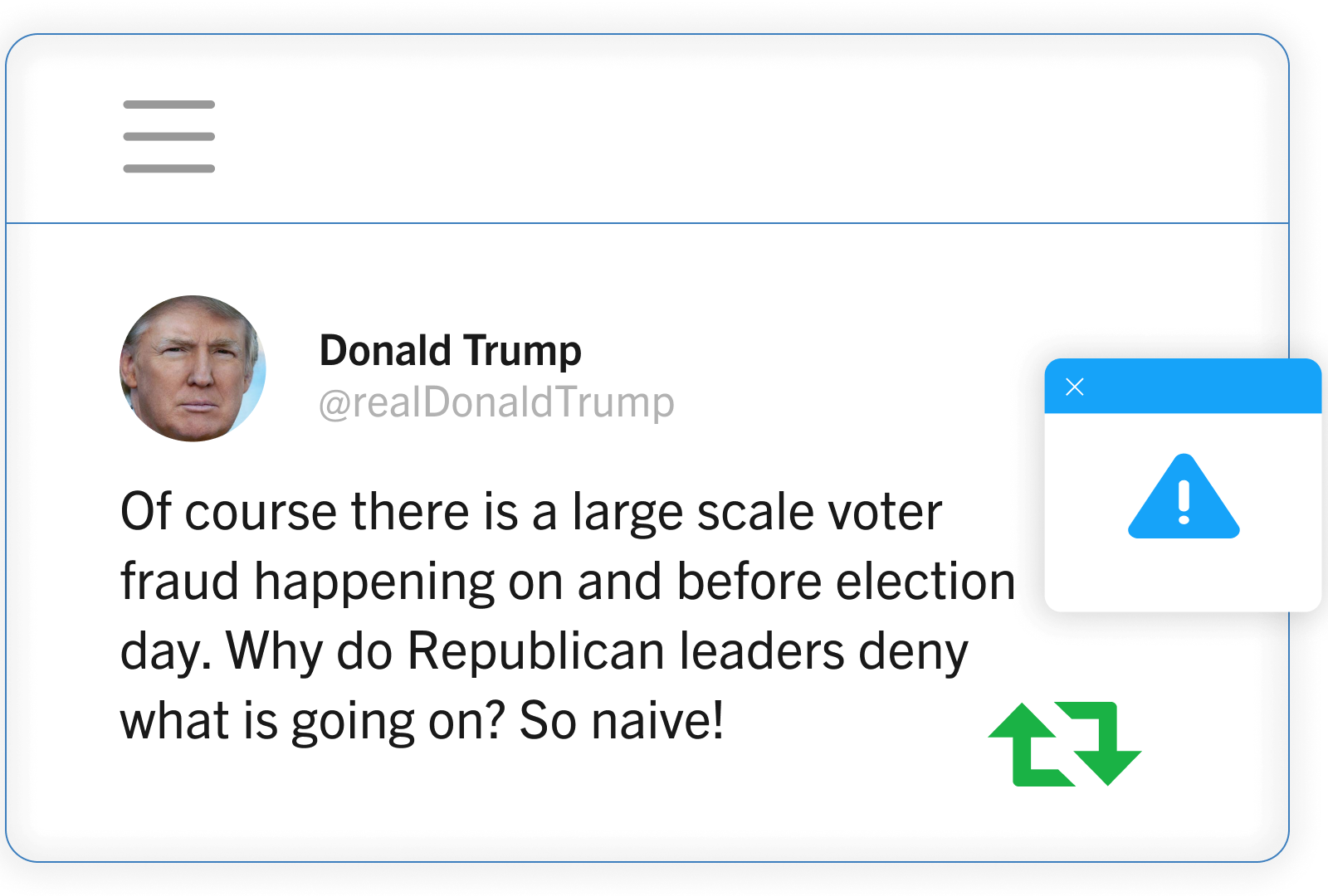

For now, making convincing deepfakes still requires expertise, but even poor forgeries find huge audiences on social media. Although crudely doctored, a shallowfake of US politician Nancy Pelosi drew millions of views on Facebook. The footage was slowed down so that her speech sounded slurred, giving the impression that she was drunk or ill.

Most of us do not look closely for signs of manipulation. We treat audiovisual footage as irrefutable proof, relying on it to make judgments and inform our actions. Leaked conversations and images instantly discredit politicians, while videos of police brutality drive us to demand reform. Deepfake technology leverages this instinctive trust in the medium to trick us.

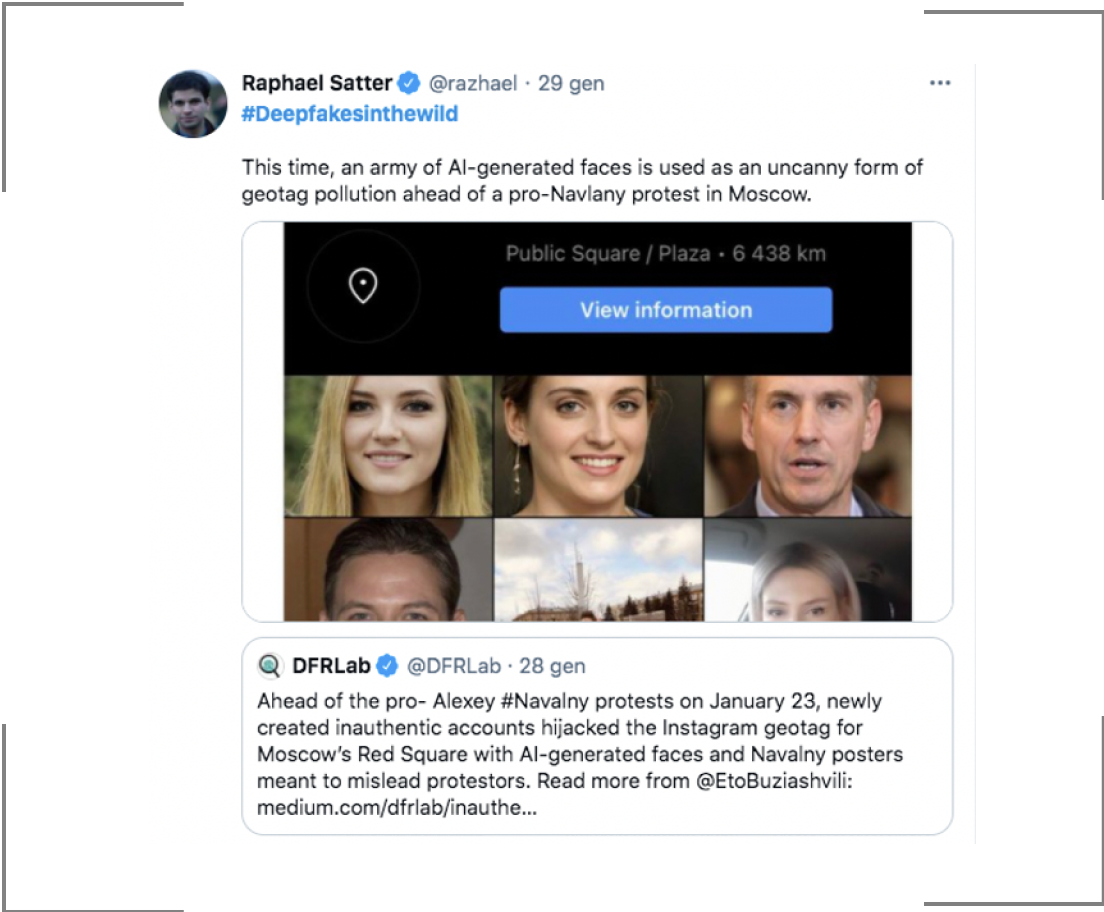

Deepfakes are eerily effective tools of deception and are quickly turning into a powerful agent of social and political instability. Our capacity for constructive debate may be severely diminished as our collective grasp on reality becomes more precarious.

Answers not included

Given the breakneck pace of technological advancement, digital forgeries will only become harder to detect.

Technology firms propose safeguards, such as embedding software in cameras to create video watermarks. But the threat runs deeper: deepfakes can reach and influence many people in the time it takes to authenticate content.

Laws against publishing deepfakes are also hard to enforce and do not target their most pernicious effects. A year after China introduced regulations on the distribution of deepfakes, fraudsters used them to hack government-run facial recognition systems.

Yet facial identification systems are being introduced everywhere, from unlocking our phones to paying for our purchases. With technology mediating more interactions and transactions, deepfakes have a greater potential to harm even ordinary citizens.

The dangers are imminent, and there is no clear solution yet. Whatever we opt to do, it will take a concerted effort from all sectors of society to stem this new agent of division.