Over the past year, streaming not only met our individual needs and preferences but also brought people with shared interests together.

Solidarity in streaming, or life in a bubble?

Streaming platforms allow communities to organise and demonstrate their collective power from wherever they are.

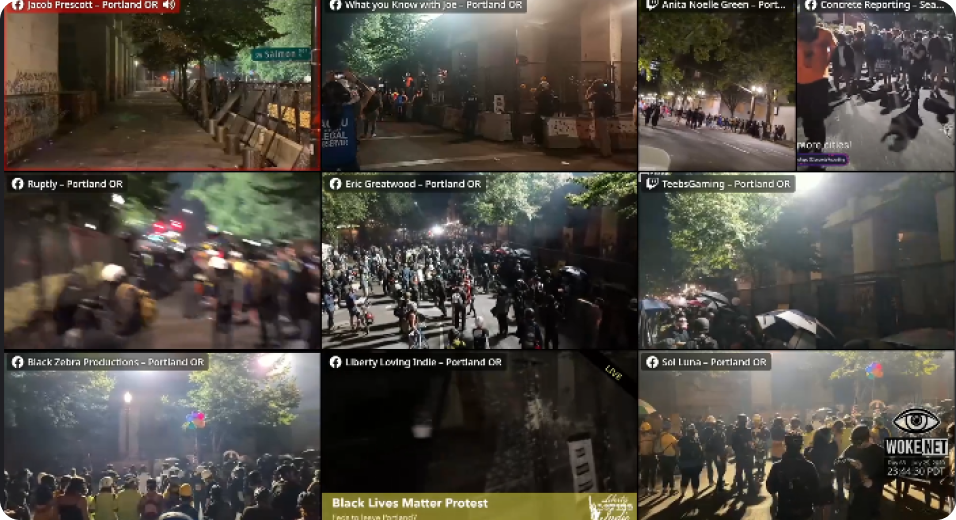

We saw this last summer, when Black Lives Matter protestors streamed demonstrations from multiple US cities following the death of George Floyd. Woke.net’s Twitch channel pulled simultaneous broadcasts of these protests from across the country into a single feed, showcasing the strength and solidarity of the movement.

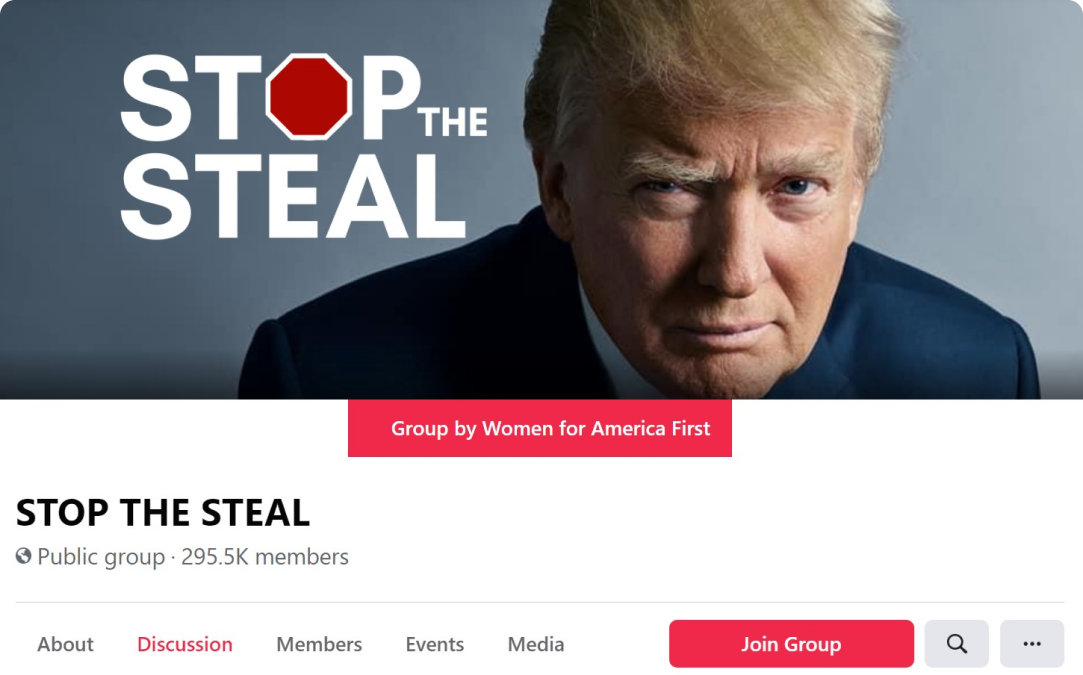

However, the same algorithms that build communities can also create “filter bubbles” that distort the information people receive. Echo chambers such as the one built around the claim that the 2020 US election was “stolen” reinforce falsehoods instead of exposing people to different views.

Over time, these “bubbles” can have a polarising effect, legitimising hate speech and violence towards certain communities.

The road to insurrection

A study showed that 64 per cent of users who joined extremist groups on Facebook did so because of its algorithm’s recommendations. Similarly, YouTube was found to be the most frequently cited cause of “red-pilling” in far-right chat rooms. “Red-pilling” is Internet slang for converting someone to fascist, racist and anti-Semitic beliefs.

Used uncritically, these technological tools make people more susceptible to misinformation. On the morning of January 6, 2021, stoked by weeks of anger over claims that the election had been “stolen”, a pro-Trump mob stormed the Capitol. Many alt-right personalities live-streamed the chaos to online audiences who cheered them on.